This paper presents a comprehensive approach to optimizing industrial spare-parts inventory using data analysis techniques, including standardization, Principal Component Analysis (PCA), clustering methods, normality testing, ...

Electrical drives today generally combine an inverter with an induction machine, so both elements must be taken into account to provide a relevant diagnosis of these systems. In this context, the paper presents...

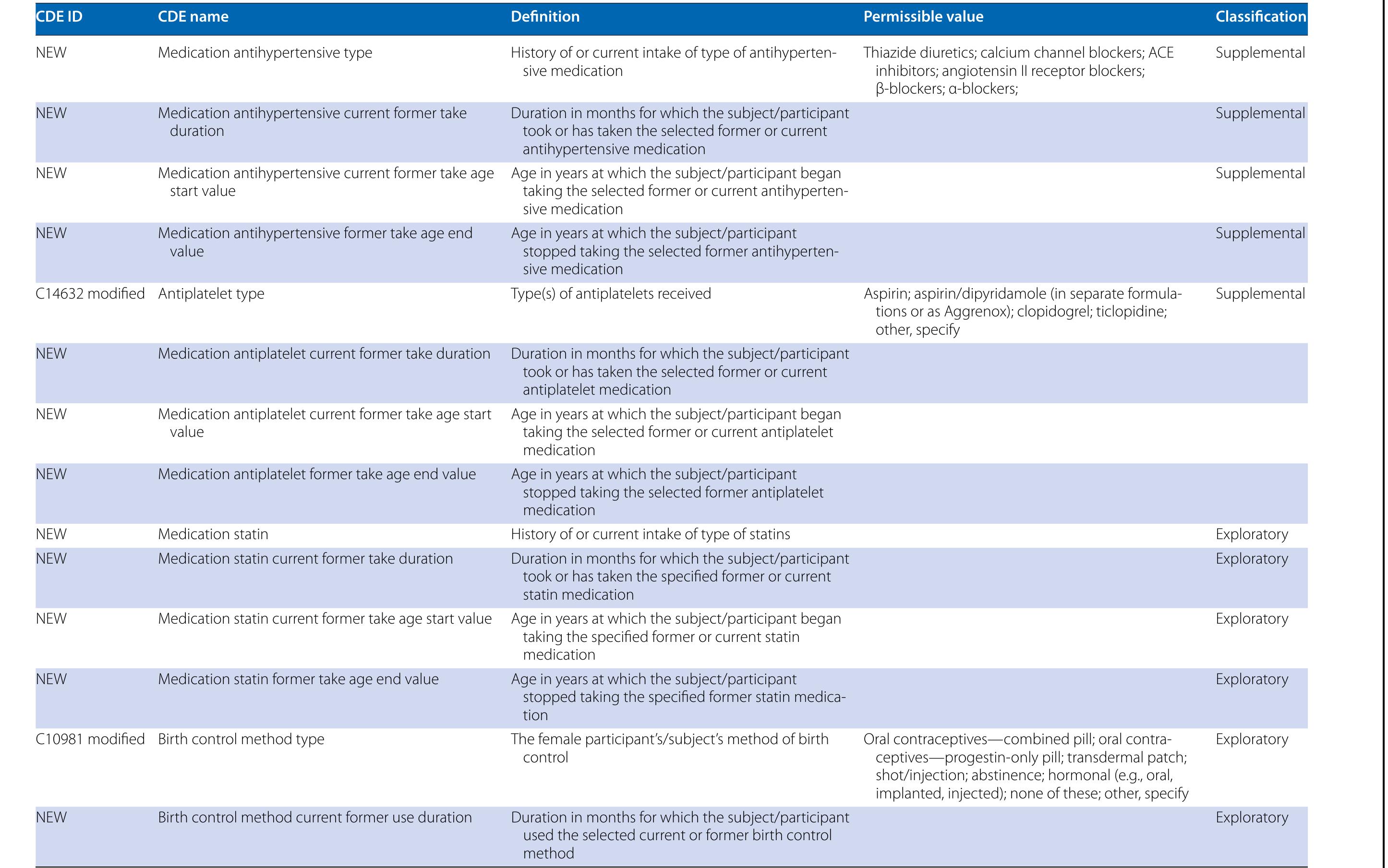

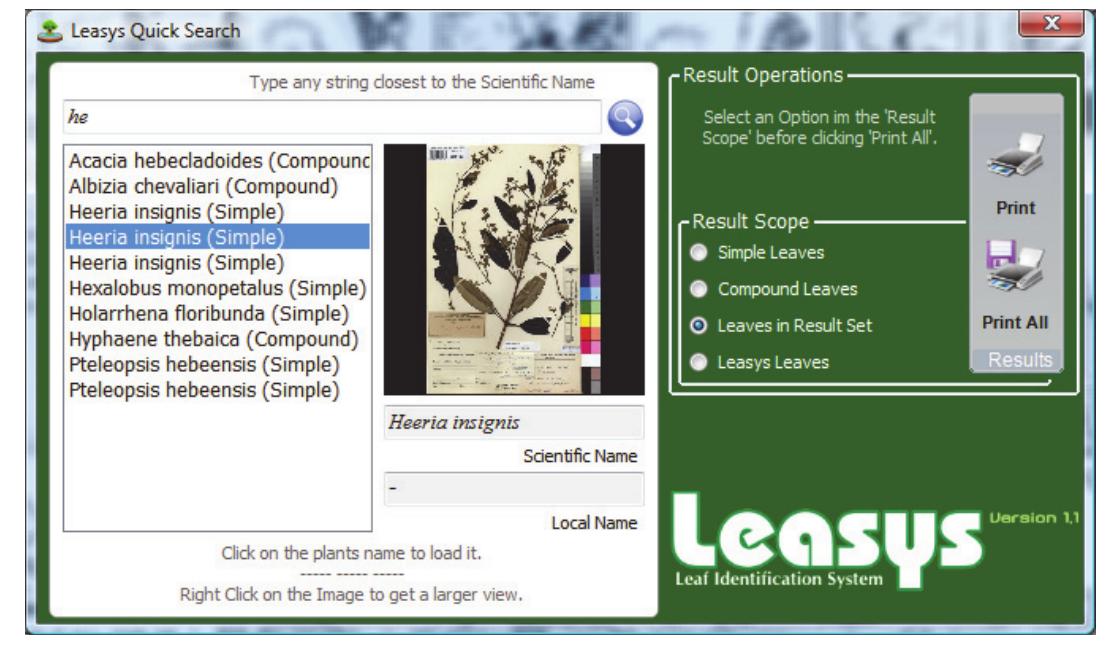

Introduction: Variability in the usage and definition of data characteristics in previous cohort studies on unruptured intracranial aneurysms (UIA) has complicated the pooling and proper interpretation of these data. The aim of the National Institute...

As governments worldwide work to transform manual services into electronic ones, semantic interoperability has emerged as a key challenge: system-to-system interaction requires standardized data definitions, codification...

Advances in diagnostic and treatment technology have remarkably transformed the concept of internal medicine, establishing the new idea of personalized medicine. Inter- and intra-patient tumor...

Understanding the biogeography of past and present fire events is particularly important in tropical forest ecosystems, where fire rarely occurs in the absence of human ignition. Open science databases have facilitated comprehensive and...

To develop an infrastructure for the structured and automated collection of interoperable radiation therapy (RT) data into a national clinical quality registry. Materials and methods: The present study was initiated in 2012 with the...

Growing food demand and the shrinking of rural areas require striving for optimal production results. Decision support systems can help achieve this; the critical point of their application is the availability of proper...

This work presents the development of an XML-based language for standardizing the information needed to build molecular rovibrational Hamiltonians. This Molecular Spectroscopic Simulations Markup Language (MSSML) allows one to...

In 2007, the scholars Frederick Burwick and James McKusick published *Faustus: From the German of Goethe, Translated by Samuel Taylor Coleridge* with Oxford University Press. This edition articulated the conclusion that Samuel Taylor Coleridge is...

Discovery of the chronological or geographical distribution of collections of historical text can be more reliable when based on multivariate rather than on univariate data because multivariate data provide a more complete description. ...

The Hungarian Soil Information and Monitoring System (SIM) covers the whole country and provides an opportunity to create similar information systems for other natural resources (atmosphere, water supply, flora, biological resources, etc.). ...

The invasion of new technologies, combined with the high cost of running a shop, forces enterprises to search for new sales methods. Network applications and ICT (Information and Communication Technology) can help achieve e-commerce goals. In...

The standard scientific methodology in linguistics is the empirical testing of falsifiable hypotheses. As such, hypothesis generation is central; it involves formulating a research question about a domain of interest and...

In this paper, we describe the model and scenarios, built on Semantic Web foundations, of a decision support system (DSS) for grain drying processes. In creating the DSS model, we considered key knowledge bottlenecks for...

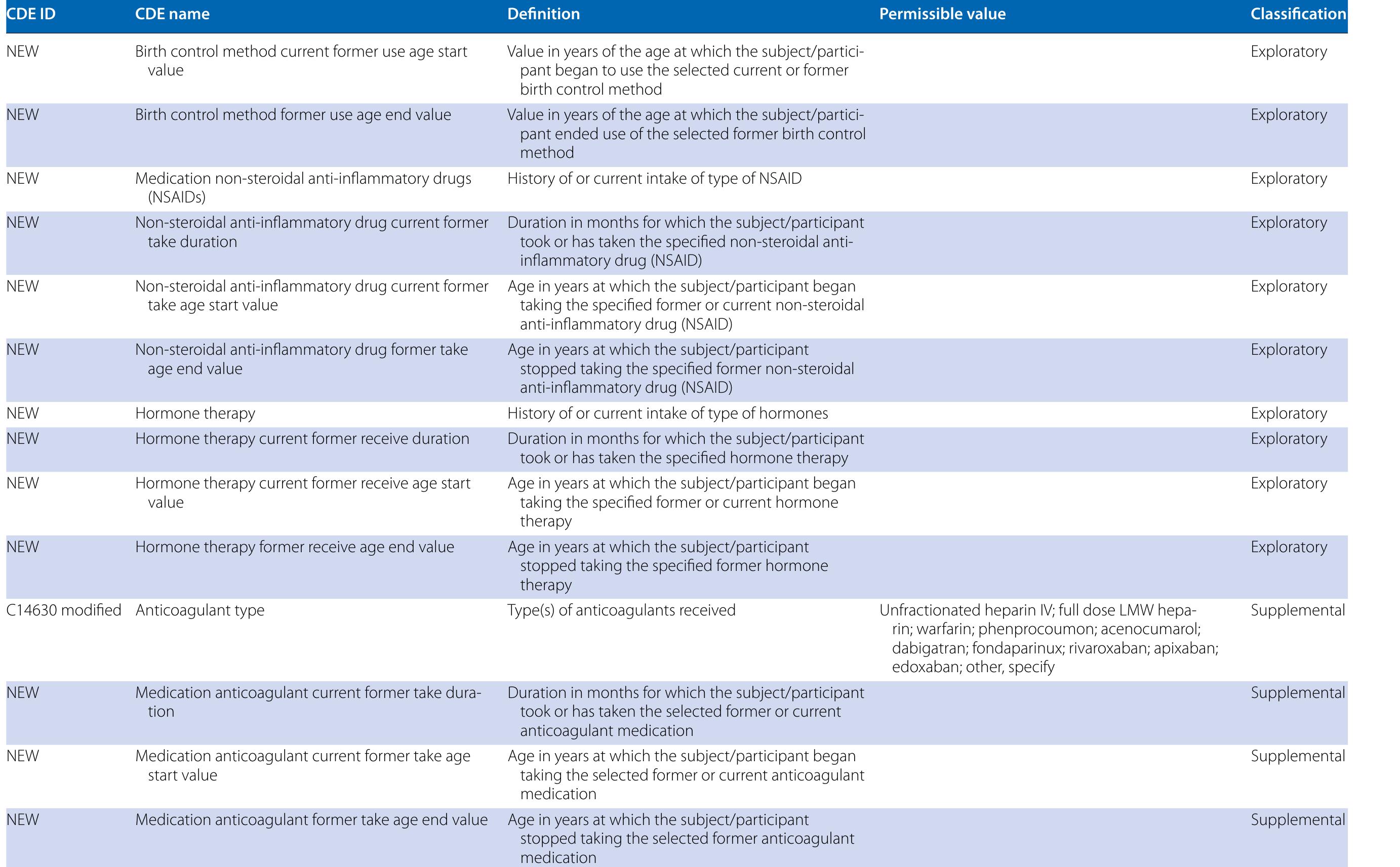

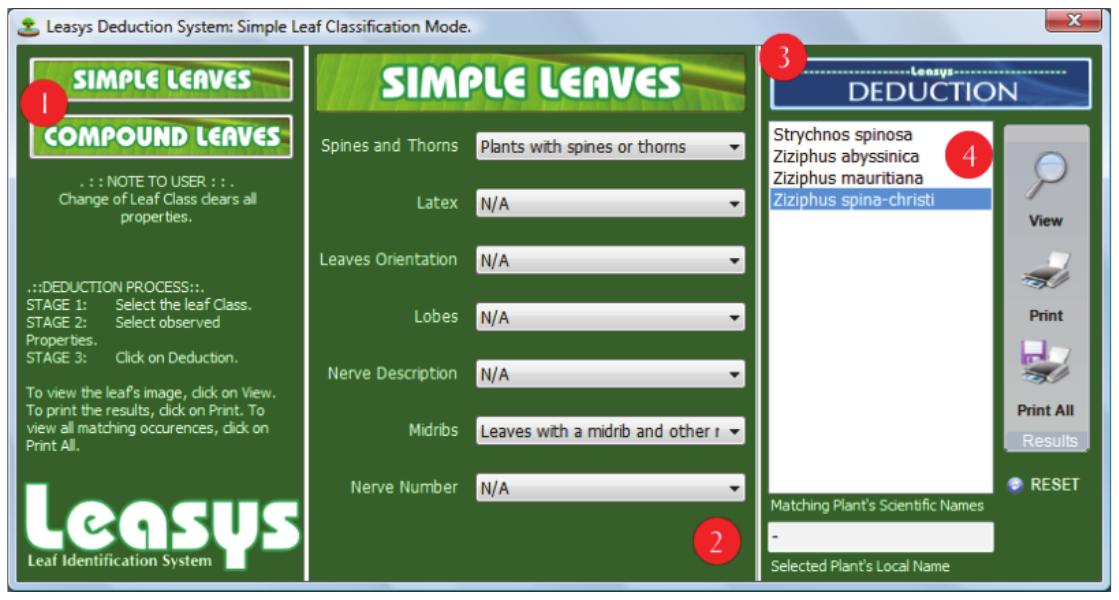

To make plant identification easier and less cumbersome, a computer-based software package called Leasys was developed. It is a computerized version of a field key prepared for on-the-spot identification of savanna tree species in...

The proliferation of computational technology has generated an explosive production of electronically encoded information of all kinds. In the face of this, traditional philological methods for search and interpretation of data have...

The proliferation of computational technology has generated an explosive production of electronically encoded information of all kinds. In the face of this, traditional paper-based methods for search and interpretation of data have been...

Where the variables selected for cluster analysis of linguistic data are measured on different numerical scales, those whose scales permit relatively larger values can have a greater influence on clustering than those whose scales...
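
The scale effect described above can be illustrated with a small sketch. It assumes Euclidean distance and z-score standardization (one common remedy; the paper itself may use a different normalization), and the four texts and two variables are invented purely for illustration.

```python
import numpy as np

# Hypothetical data: four texts (rows) described by two variables on very
# different scales: a raw frequency count and a proportion between 0 and 1.
X = np.array([
    [1000.0, 0.10],
    [1020.0, 0.90],
    [1060.0, 0.12],
    [1080.0, 0.92],
])

def pairwise_euclidean(M):
    """Matrix of Euclidean distances between all pairs of rows of M."""
    diff = M[:, None, :] - M[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def zscore(M):
    """Rescale each column to mean 0 and (population) standard deviation 1."""
    return (M - M.mean(axis=0)) / M.std(axis=0)

def nearest_neighbour(D, i):
    """Index of the row closest to row i, skipping i itself."""
    order = np.argsort(D[i])
    return int(order[order != i][0])

raw = pairwise_euclidean(X)
std = pairwise_euclidean(zscore(X))

# On the raw scale the count column dominates, so text 1 looks closest to
# text 0 even though their proportions differ by 0.8.
print(nearest_neighbour(raw, 0))  # 1
# After standardization both variables contribute comparably, and text 2
# (whose proportion is almost identical) becomes the nearest neighbour.
print(nearest_neighbour(std, 0))  # 2
```

Because any distance-based clustering method starts from such a distance matrix, the change of nearest neighbour here would propagate into the resulting clusters.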

Data integrity and data standardization are significant topics for information retrieval systems and digital libraries. Although many standards (such as VIAF, AACR2, and MARC) and institutional regulations have been developed for...

In my previous article, "Hierarchical and Non-Hierarchical Linear and Non-Linear Clustering Methods to 'Shakespeare Authorship Question'", I used Mean Proximity as a linear hierarchical clustering method and Principal...

In a previous tutorial article, I looked at a proximity coefficient and, in light of that proximity, created a vector-distance matrix and used it to construct a hierarchical tree with different hierarchical clustering methods...
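
The workflow summarized here (a proximity measure, a distance matrix built from it, and hierarchical trees from several clustering methods) can be sketched with SciPy. This is a hypothetical illustration, not the article's own procedure: Euclidean distance stands in for the article's proximity coefficient, the five texts are invented, and single, complete, and average linkage are example choices of "different hierarchical clustering methods".

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform
from scipy.cluster.hierarchy import linkage, fcluster

# Hypothetical data: five texts described by three variables,
# forming two obvious groups (texts 0-2 and texts 3-4).
X = np.array([
    [1.0, 2.0, 1.5],
    [1.1, 2.1, 1.4],
    [0.9, 1.9, 1.6],
    [5.0, 6.0, 5.5],
    [5.2, 5.9, 5.4],
])

# Proximity step: condensed vector of pairwise Euclidean distances.
d = pdist(X, metric="euclidean")
# Full symmetric distance matrix with zeros on the diagonal.
D = squareform(d)

trees = {}       # linkage (merge) table for each method
partitions = {}  # flat two-cluster labels cut from each tree
for method in ("single", "complete", "average"):
    Z = linkage(d, method=method)  # (n - 1) x 4 table of successive merges
    trees[method] = Z
    partitions[method] = fcluster(Z, t=2, criterion="maxclust")
    print(method, partitions[method])
```

Each `Z` can be passed to `scipy.cluster.hierarchy.dendrogram` to draw the tree; comparing the trees from different methods on the same distance matrix is what reveals how sensitive the grouping is to the choice of clustering method.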

This paper addresses an issue that has a fundamental bearing on the validity of analytical results based on such data: sparsity. The discussion has three main parts. The first part shows how a particular class of computational...

This chapter deals with one type of analytical tool: exploratory multivariate analysis. The discussion has six main parts: the first is this introduction, and the second explains what is meant by exploratory...