Key research themes

1. How can neural architecture search (NAS) methods efficiently discover optimized architectures under resource or search space constraints?

This research area focuses on making automated neural architecture search (NAS) more efficient and effective, so that it discovers models optimized not only for accuracy but also for practical deployment constraints such as computational resources, latency, and search cost. It matters because NAS has traditionally demanded extensive computational resources and has often neglected the real-world constraints that determine whether a model can be adopted on edge devices and embedded systems. Recent advances address these bottlenecks with resource-aware reward functions, search space pruning, and fast search algorithms.
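
As a rough illustration of two of the ideas named above, the sketch below combines a resource-aware reward with a simple pruning step. It assumes a soft multiplicative latency penalty of the form `accuracy * (latency / target) ** exponent`, a shape used in some mobile-oriented NAS work. The `Candidate` class, the helper names, the exponent value, and the candidate numbers are all hypothetical and chosen only for illustration; they are not taken from any specific study summarized here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for one architecture in the search space."""
    name: str
    accuracy: float    # validation accuracy in [0, 1]
    latency_ms: float  # measured or predicted latency on the target device


def resource_aware_reward(accuracy, latency_ms, target_ms, exponent=-0.07):
    """Scale accuracy by a soft latency penalty.

    With a negative exponent, models slower than the target are penalized
    and faster ones get a small bonus. The default exponent is an
    illustrative assumption, not a recommended setting.
    """
    if latency_ms <= 0 or target_ms <= 0:
        raise ValueError("latencies must be positive")
    return accuracy * (latency_ms / target_ms) ** exponent


def prune(candidates, max_latency_ms):
    """Search space pruning in its simplest form: drop infeasible candidates."""
    return [c for c in candidates if c.latency_ms <= max_latency_ms]


# Made-up candidates standing in for sampled architectures.
candidates = [
    Candidate("small", accuracy=0.70, latency_ms=40.0),
    Candidate("medium", accuracy=0.75, latency_ms=80.0),
    Candidate("large", accuracy=0.76, latency_ms=300.0),
]

target_ms = 80.0
feasible = prune(candidates, max_latency_ms=2 * target_ms)
best = max(
    feasible,
    key=lambda c: resource_aware_reward(c.accuracy, c.latency_ms, target_ms),
)
print(best.name)
```

In a real search the reward would typically drive a controller, an evolutionary loop, or a gradient-based relaxation rather than a single `max` over a fixed list; the exhaustive pick here only keeps the example short.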

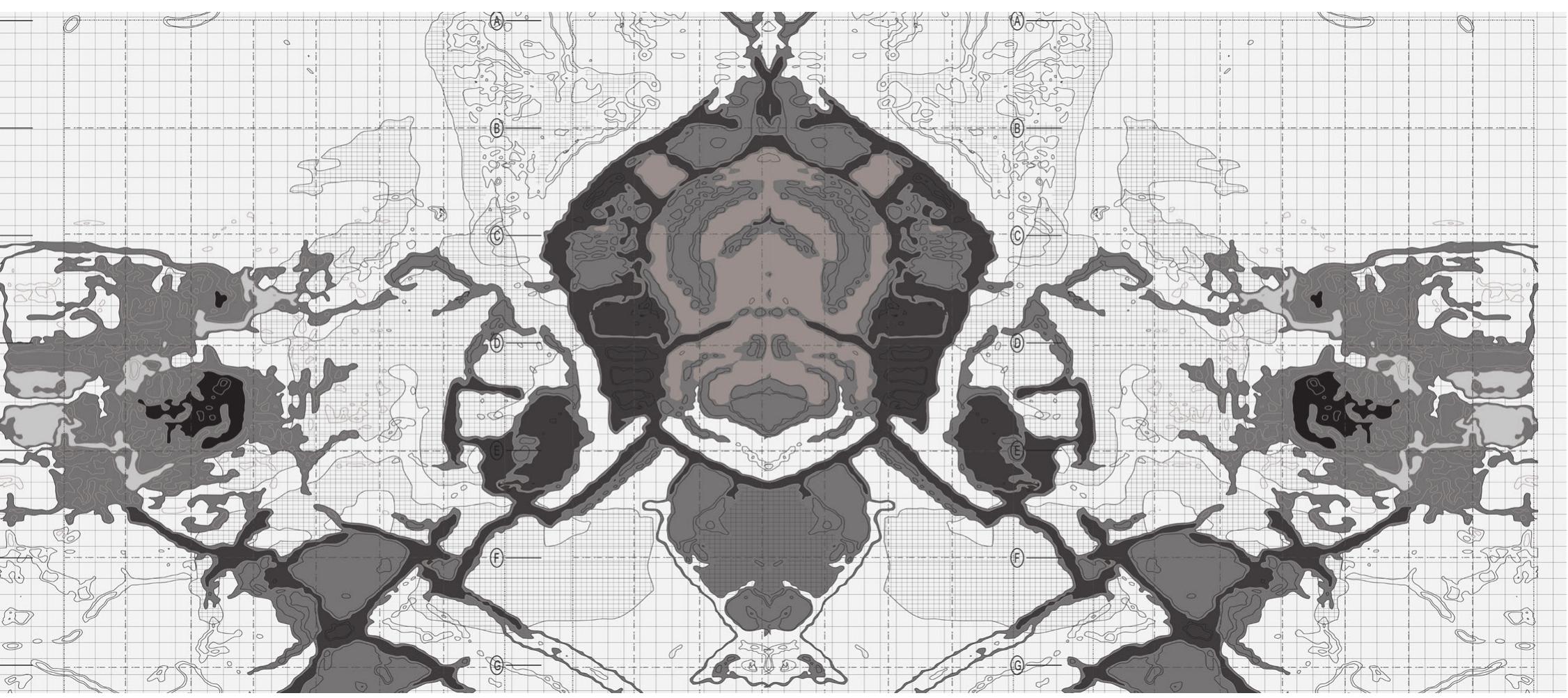

2. Which biologically inspired or brain-inspired principles can inform neural architectures and artificial cognitive systems?

A large body of research investigates how biological neural circuits and brain function can inspire neural architectures and cognitive models that enhance artificial intelligence. This includes studying the functional neuronal units that support perception, memory, and language, as well as cognitive architectures designed to mirror human cognition. Understanding these foundations matters for developing AI systems with improved interpretability, generalization, and potentially self-awareness, moving beyond black-box artificial neural networks (ANNs) toward more explainable and human-like intelligence.

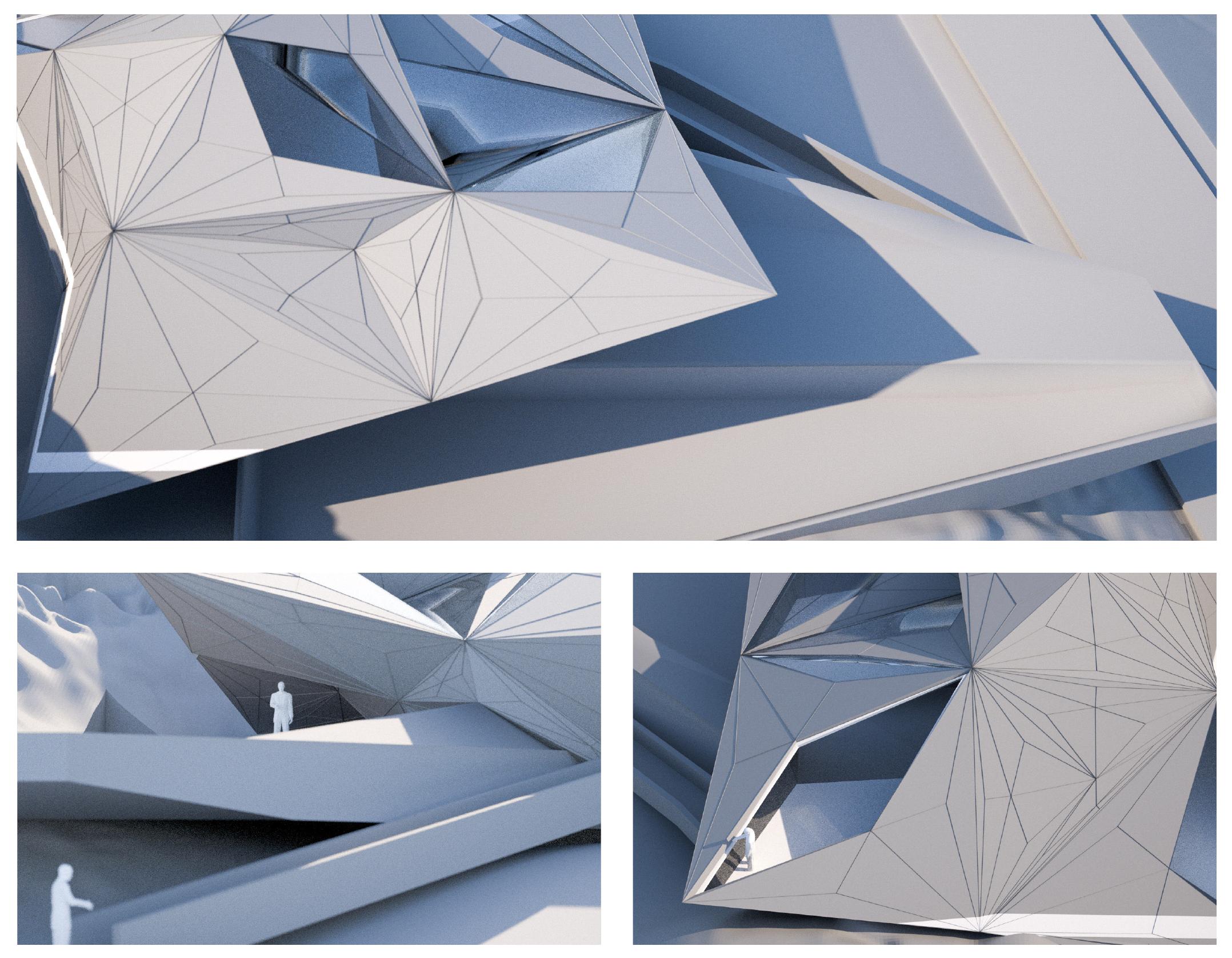

3. How can neural architectures and advanced learning models leverage alternative structural motifs and representations to improve learning performance and interpretability?

Neural architectures that go beyond traditional feedforward CNNs and fully connected layers, such as tree architectures or graph-based models, can offer gains in efficiency, biological plausibility, interpretability, and performance. At the same time, alternative representations such as 3D graphs permit richer modeling of complex domains like architecture design. Investigating such structural innovations can help bridge the gap between artificial models and their biological counterparts, while opening new avenues for better generalization and reduced computational overhead.