This paper presents an approach to automatic head movement detection and classification in data from a corpus of video-recorded face-to-face conversations in Danish involving 12 different speakers. A number of classifiers were trained...

This paper presents an analysis of the temporal alignment between head movements and associated speech segments in the NOMCO corpus of first encounter dialogues [1]. Our results show that head movements tend to start slightly before the...

This paper presents the multimodal corpora that are being collected and annotated in the Nordic NOMCO project. The corpora will be used to study communicative phenomena such as feedback, turn management and sequencing. They already include...

This paper discusses nodding as one of the most significant means of feedback signalling in human conversations. It focuses on nodding in Estonian first encounter conversations, and compares nodding with similar feedback behaviour in...

In this paper we describe the goals of the Estonian corpus collection and analysis activities, and introduce the recent collection of Estonian First Encounters data. The MINT project aims at deepening our understanding of the...

The paper compares how feedback is expressed via speech and head movements in comparable corpora of first encounters in three Nordic languages: Danish, Finnish and Swedish. The three corpora have been collected following common...

This paper describes the collection and annotation of comparable multimodal corpora for Nordic languages in a project involving research groups from Denmark, Estonia, Finland and Sweden. The goal of the project is to provide annotated...

This article presents the Danish NOMCO Corpus, an annotated multimodal collection of video-recorded first acquaintance conversations between Danish speakers. The annotation includes speech transcription with word boundaries, and...

This paper is about the automatic recognition of head movements in videos of face-to-face dyadic conversations. We present an approach where recognition of head movements is cast as a multimodal frame classification problem based on...

We present a method to support the annotation of head movements in video-recorded conversations. Head movement segments from annotated multimodal data are used to train a model to detect head movements in unseen data. The resulting...

In this article, we compare feedback-related multimodal behaviours in two different types of interactions: first encounters between two participants who do not know each other in advance, and naturally occurring conversations between two and...

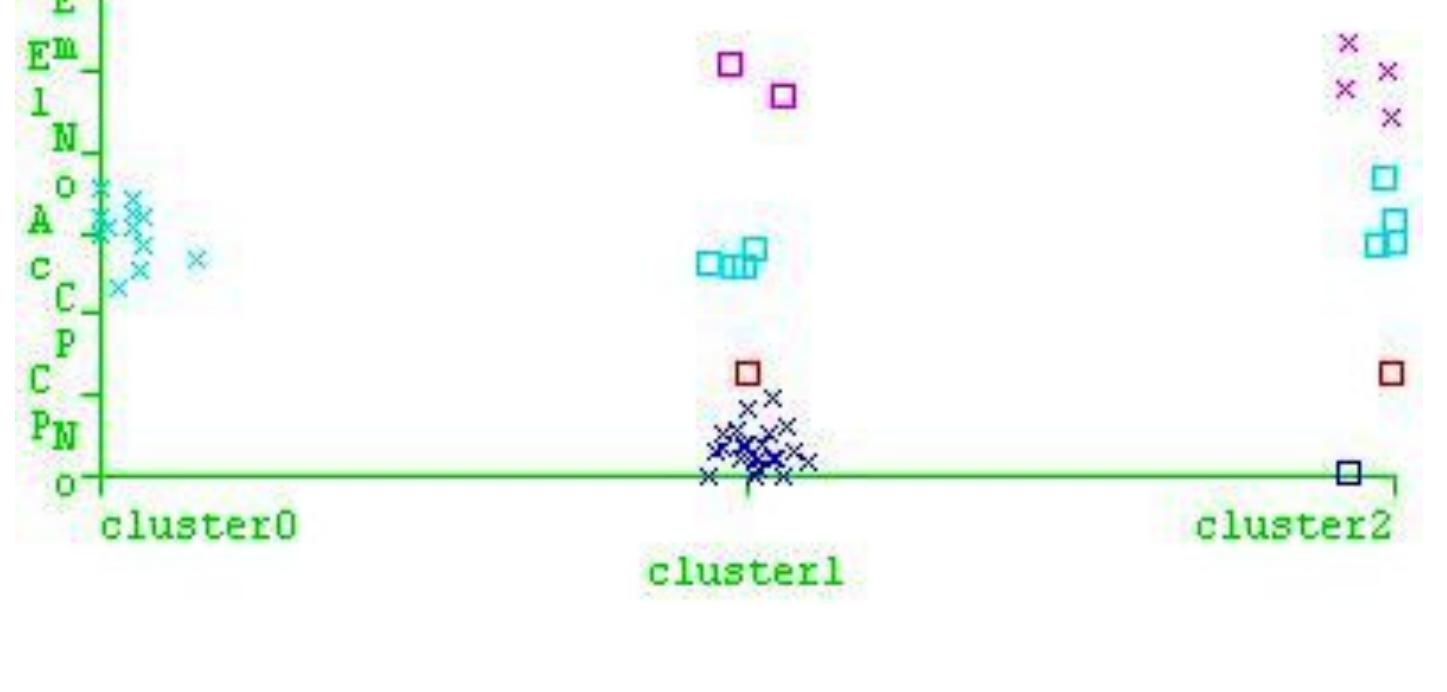

This paper deals with the results of a machine learning experiment conducted on annotated gesture data from two case studies (Danish and Estonian). The data mainly concern facial displays, which are annotated with attributes relating to...

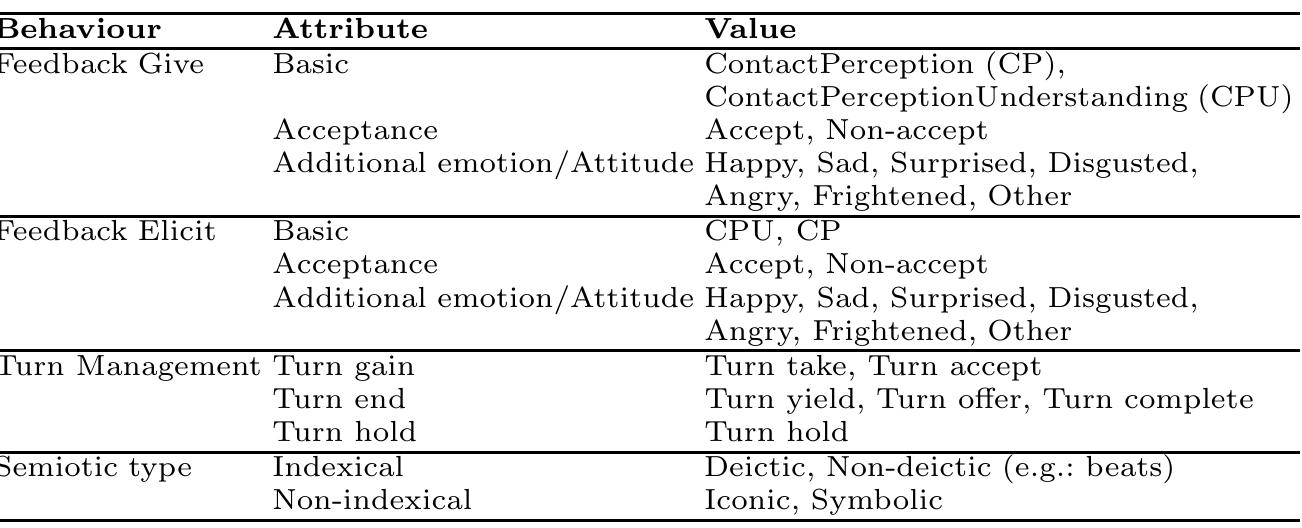

This paper deals with a multimodal annotation scheme dedicated to the study of gestures in interpersonal communication, with particular regard to the role played by multimodal expressions for feedback, turn management and sequencing. The...

The paper investigates the reusability of head movement annotations in a corpus in one language for predicting the feedback functions of head movements in a comparable corpus in another language. The two corpora consist of...

This paper deals with the way in which feedback is expressed through speech and gestures in the Danish NOMCO corpus of dyadic first encounters. The annotation includes the speech transcription as well as attributes concerning shape and...

In human communication, people adapt to each other and jointly activate behavior in different ways. In this pilot study, focusing on one individual (Cf2) in four interactions, two types of co-activation, i.e. repetition and reformulation in...

This paper presents a study of multimodal communication in spontaneous “getting to know each other” conversations. The study focuses on repeated head movements (head-nods and head-shakes) and the speech co-occurring with them. The main...

This paper discusses multimodal feedback signalling in Finnish first encounter conversations, especially the use of head nodding to signal shared understanding of the presented information. The goal of the paper is to study the...

The MUMIN multimodal coding scheme was originally created to experiment with annotation of multimodal communication in short clips from movies and in video clips of interviews taken from Swedish, Finnish and Danish television...