Key research themes

1. How can contrastive divergence algorithms be improved to reduce bias and enhance convergence properties in training Restricted Boltzmann Machines?

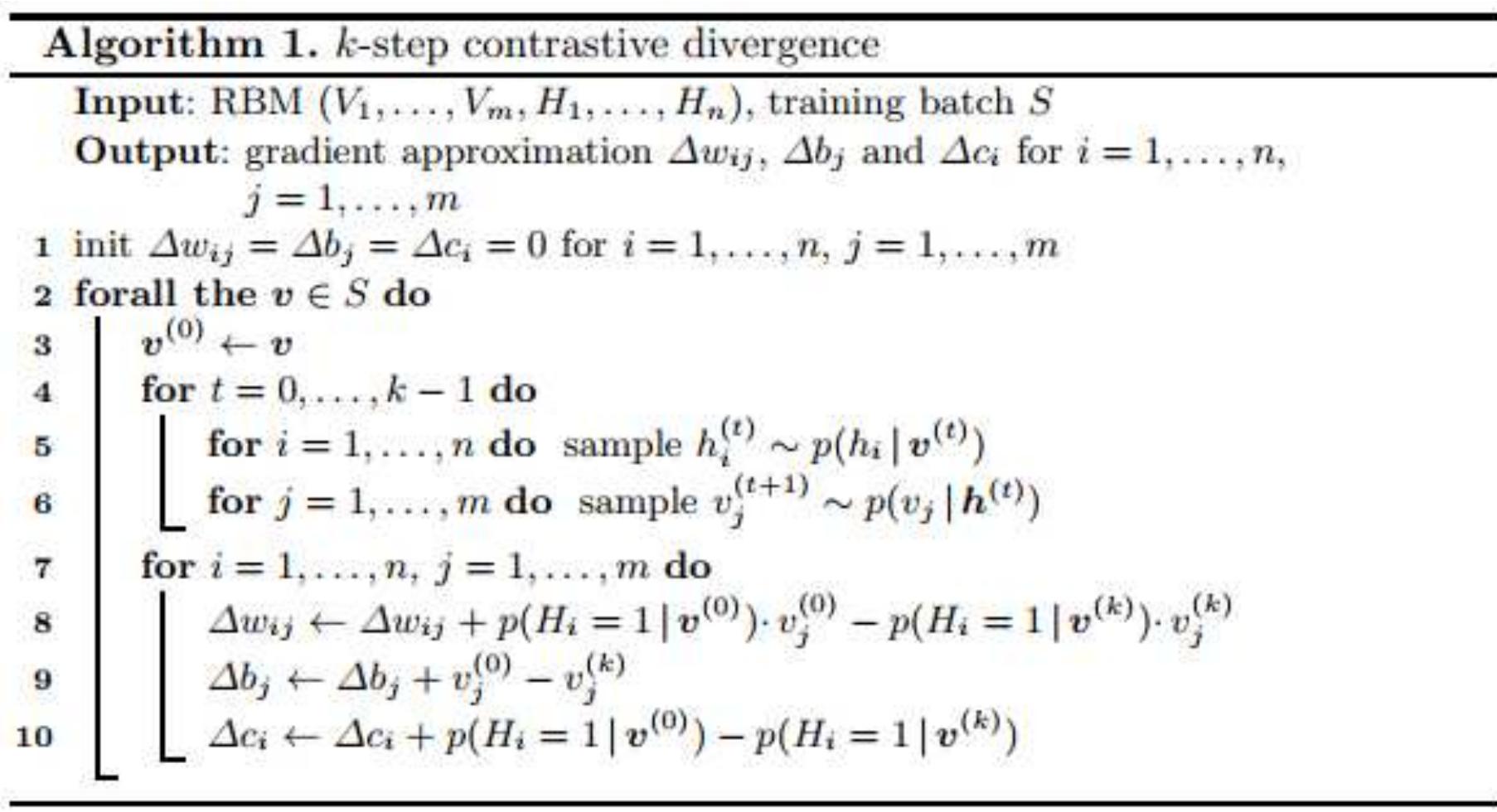

This research area addresses the bias and convergence problems of contrastive divergence (CD) methods used to train Restricted Boltzmann Machines (RBMs). Despite their practical success, standard CD and its variants yield biased estimates of the log-likelihood gradient, which can cause training to diverge or to settle on parameters with poor likelihood. Modifications to the CD framework aim to reduce this bias, improve likelihood estimates, and provide reliable stopping criteria so that learning is more stable and accurate.
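To make the source of the bias concrete, here is a minimal sketch of a CD-k gradient estimate for a Bernoulli RBM, written with NumPy. The function name `cd_k_gradient`, the parameter layout (`W` of shape visible by hidden, visible bias `b`, hidden bias `c`), and the choice to use hidden probabilities rather than samples in the statistics are assumptions made for illustration, not a method taken from any particular paper. The negative phase uses a Gibbs chain started at the data and run for only `k` steps, so it approximates the model expectation rather than computing it; that truncation is what makes the estimate biased.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd_k_gradient(v0, W, b, c, k=1, rng=None):
    """CD-k estimate of the log-likelihood gradient for a Bernoulli RBM.

    v0: (n, n_visible) binary data batch (float array of 0s and 1s)
    W:  (n_visible, n_hidden) weights; b: visible bias; c: hidden bias
    """
    rng = np.random.default_rng() if rng is None else rng

    # Positive phase: hidden activation probabilities given the data.
    ph0 = sigmoid(v0 @ W + c)

    # Negative phase: k steps of block Gibbs sampling started at the data.
    vk, phk = v0, ph0
    for _ in range(k):
        h = (rng.random(phk.shape) < phk).astype(v0.dtype)
        pv = sigmoid(h @ W.T + b)
        vk = (rng.random(pv.shape) < pv).astype(v0.dtype)
        phk = sigmoid(vk @ W + c)

    # Difference of data statistics and (truncated-chain) model statistics.
    n = v0.shape[0]
    dW = (v0.T @ ph0 - vk.T @ phk) / n
    db = (v0 - vk).mean(axis=0)
    dc = (ph0 - phk).mean(axis=0)
    return dW, db, dc
```

A gradient-ascent step would add a small multiple of `dW`, `db`, and `dc` to the parameters. Increasing `k` moves the negative-phase samples closer to the model distribution at a higher cost per update.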

2. What alternative criteria and methods can be employed to monitor and improve the stopping point during contrastive divergence training of Restricted Boltzmann Machines?

Because the likelihood can behave non-monotonically during CD training, reliable stopping criteria beyond reconstruction error are critical. This area investigates new metrics, neighborhood-based measures, and auxiliary functionals that detect when further training no longer improves the model, thereby preventing overfitting or divergence and making training more robust in practice.
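One way to see why reconstruction error alone can mislead is to track it next to the exact log-likelihood on a toy model. The sketch below assumes a Bernoulli RBM small enough that all visible configurations can be enumerated (roughly a dozen visible units or fewer). The helper names are hypothetical and the mean-field reconstruction is a simplification. It is a diagnostic for toy problems, not one of the stopping criteria proposed in the literature.

```python
import itertools
import numpy as np

def free_energy(v, W, b, c):
    # F(v) = -b.v - sum_j log(1 + exp(c_j + v.W[:, j]))
    return -(v @ b) - np.logaddexp(0.0, v @ W + c).sum(axis=-1)

def exact_mean_log_likelihood(data, W, b, c):
    """Average log p(v) over the data, with log Z computed by brute force.

    Enumerates all 2**n_visible visible states, so only usable for tiny RBMs.
    """
    n_visible = W.shape[0]
    all_v = np.array(list(itertools.product([0.0, 1.0], repeat=n_visible)))
    neg_f = -free_energy(all_v, W, b, c)
    m = neg_f.max()
    log_z = m + np.log(np.exp(neg_f - m).sum())  # stable log-sum-exp
    return float((-free_energy(data, W, b, c)).mean() - log_z)

def reconstruction_error(data, W, b, c):
    """Mean squared error of a deterministic (mean-field) one-step reconstruction."""
    ph = 1.0 / (1.0 + np.exp(-(data @ W + c)))
    pv = 1.0 / (1.0 + np.exp(-(ph @ W.T + b)))
    return float(((data - pv) ** 2).mean())
```

Logging both quantities every few epochs on a toy dataset makes it visible when reconstruction error keeps falling while the log-likelihood has already peaked or started to drop.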

3. How are various noise-contrastive estimation (NCE) methods related to contrastive divergence and maximum likelihood estimation for unnormalised probabilistic models?

This research theme clarifies the theoretical connections between NCE variants, notably ranking NCE (RNCE) and conditional NCE (CNCE), and two established approaches: maximum likelihood estimation with importance sampling, and contrastive divergence as used in energy-based model learning. Establishing equivalences and special-case relationships allows analytical insights and algorithmic improvements to carry over between these estimation methods.
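The link between RNCE and importance-sampled maximum likelihood can be seen by comparing gradients. The notation below is assumed for illustration: $\tilde p_\theta$ is the unnormalised model, $q$ a fixed noise distribution, $x_0$ a data point, $x_1,\dots,x_J \sim q$ noise samples, and $w_\theta(x) = \tilde p_\theta(x)/q(x)$. One common way to write the ranking objective and its gradient is:

$$
\mathcal{L}_{\mathrm{RNCE}}(\theta)
= \mathbb{E}\!\left[\log \frac{w_\theta(x_0)}{\sum_{j=0}^{J} w_\theta(x_j)}\right],
\qquad
\nabla_\theta \mathcal{L}_{\mathrm{RNCE}}(\theta)
= \mathbb{E}\!\left[\nabla_\theta \log \tilde p_\theta(x_0)
- \sum_{j=0}^{J} \frac{w_\theta(x_j)}{\sum_{i=0}^{J} w_\theta(x_i)}\,
\nabla_\theta \log \tilde p_\theta(x_j)\right].
$$

For comparison, the exact maximum likelihood gradient for a single data point is:

$$
\nabla_\theta \log p_\theta(x_0)
= \nabla_\theta \log \tilde p_\theta(x_0)
- \mathbb{E}_{x \sim p_\theta}\!\left[\nabla_\theta \log \tilde p_\theta(x)\right].
$$

The first terms agree. The second term of the RNCE gradient has the form of a self-normalised importance-sampling estimate of the model expectation, with weights $w_\theta(x_j)$ computed over a sample set that includes the data point itself. Because $q$ does not depend on $\theta$, $\nabla_\theta \log w_\theta = \nabla_\theta \log \tilde p_\theta$, which is why only $\tilde p_\theta$ appears inside the gradients.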