Figure 7 – uploaded by Remo Suppi

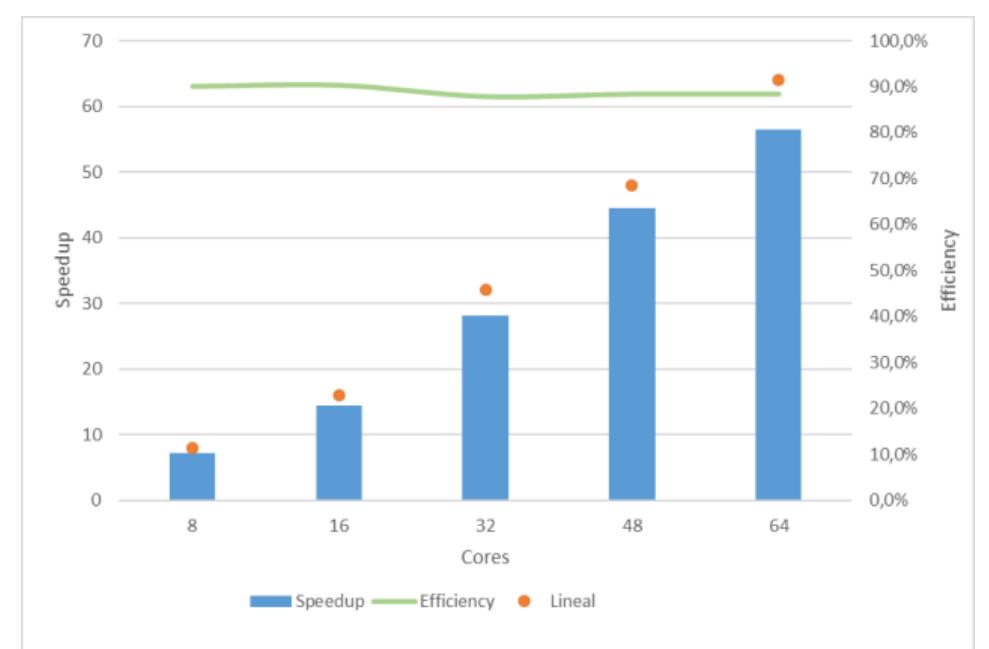

Figure 7. Speedup and efficiency on 32 cores for different sizes of the simulated space (up to x6).

Although this limitation is not imposed by the HPC architecture, it must be taken into account so that the researcher can be guided toward changes in the model. The researcher should convert (or redesign) the model to avoid large-scale data usage, which would otherwise reduce performance. Memory problems with large models are a common issue in the NetLogo community; they are essentially determined by the amount of memory required to represent the agents' elements and by the constraints imposed by the Java runtime environment.
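The caption does not state how speedup and efficiency are computed. A minimal sketch, assuming the standard definitions $S_p = T_1 / T_p$ and $E_p = S_p / p$ (where $T_1$ is the single-core run time and $T_p$ the run time on $p$ cores), is shown below. The function names and the timing values in the usage example are hypothetical and are not taken from Figure 7.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Standard speedup S_p = T_1 / T_p (assumed definition)."""
    if t_serial <= 0 or t_parallel <= 0:
        raise ValueError("run times must be positive")
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, cores: int) -> float:
    """Standard parallel efficiency E_p = S_p / p (assumed definition)."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return speedup(t_serial, t_parallel) / cores


def scaling_table(t_serial: float, times_by_cores: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Map each core count p to (speedup, efficiency) for its measured run time T_p."""
    return {
        p: (speedup(t_serial, t_p), efficiency(t_serial, t_p, p))
        for p, t_p in sorted(times_by_cores.items())
    }


if __name__ == "__main__":
    # Hypothetical timings in seconds, for illustration only.
    table = scaling_table(320.0, {8: 50.0, 16: 25.0, 32: 16.0})
    for p, (s, e) in table.items():
        print(f"p={p:2d}  speedup={s:5.2f}  efficiency={e:.3f}")
```

An efficiency well below 1 on 32 cores, as in the hypothetical 32-core row above, indicates that added cores are not fully exploited; for larger simulated spaces, memory pressure in the Java runtime is one possible contributor.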