Contestable AI by Design: Towards a Framework

Minds and Machines

https://doi.org/10.1007/S11023-022-09611-Z

Abstract

As the use of AI systems continues to increase, so do concerns over their lack of fairness, legitimacy and accountability. Such harmful automated decision-making can be guarded against by ensuring AI systems are contestable by design: responsive to human intervention throughout the system lifecycle. Contestable AI by design is a small but growing field of research. However, most available knowledge requires a significant amount of translation to be applicable in practice. A proven way of conveying intermediate-level, generative design knowledge is in the form of frameworks. In this article we use qualitative-interpretative methods and visual mapping techniques to extract from the literature sociotechnical features and practices that contribute to contestable AI, and synthesize these into a design framework.


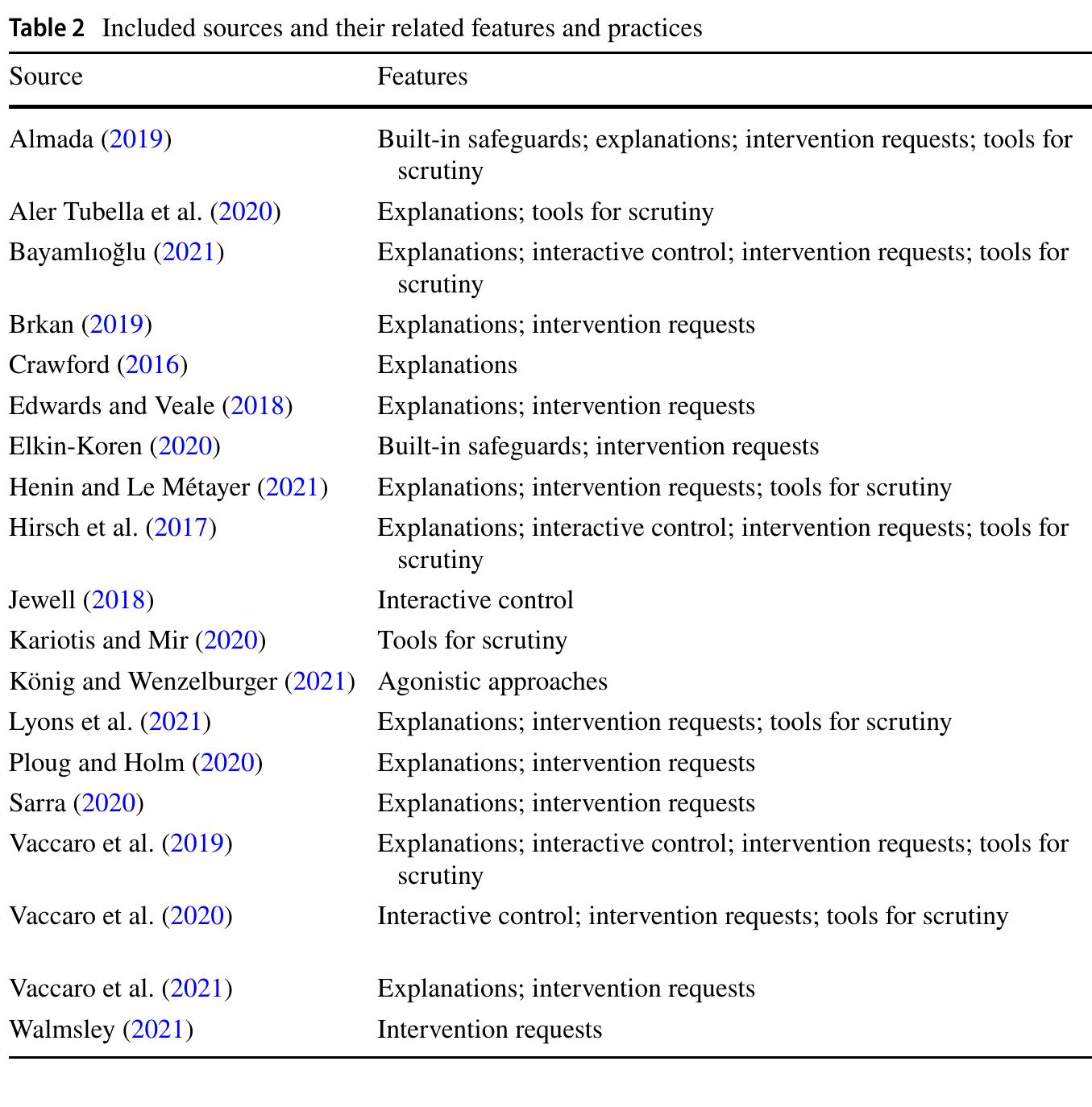

- Interactive control over automated decisions: Bayamlıoğlu (2021), Hirsch et al. (2017), Jewell (2018), Vaccaro et al. (2019, 2020)

- Explanations of system behavior: Aler Tubella et al. (2020), Almada (2019), Bayamlıoğlu (2021), Brkan (2019), Crawford (2016), Edwards and Veale (2018), Henin and Le Métayer (2021), Hirsch et al. (2017), Lyons et al. (2021), Ploug and Holm (2020), Sarra (2020), Vaccaro et al. (2019, 2021)

- Human review and intervention requests: Almada (2019), Bayamlıoğlu (2021), Brkan (2019), Edwards and Veale (2018), Elkin-Koren (2020), Henin and Le Métayer (2021), Hirsch et al. (2017), Lyons et al. (2021), Ploug and Holm (2020), Sarra (2020), Vaccaro et al. (2019, 2020, 2021), Walmsley (2021)

- Tools for scrutiny by subjects or third parties: Aler Tubella et al. (2020), Almada (2019), Bayamlıoğlu (2021), Henin and Le Métayer (2021), Hirsch et al. (2017), Kariotis and Mir (2020), Lyons et al. (2021), Vaccaro et al. (2019, 2020)

Table 4 Practices contributing to contestable AI

| Practice | Sources |
| --- | --- |
| Ex-ante safeguards | Aler Tubella et al. (2020), Almada (2019), Bayamlıoğlu (2021), Brkan (2019), Edwards and Veale (2018), Henin and Le Métayer (2021), Hirsch et al. (2017), Kariotis and Mir (2020), Lyons et al. (2021), Sarra (2020), Walmsley (2021) |
| Agonistic approaches to ML development | Almada (2019), Henin and Le Métayer (2021), Kariotis and Mir (2020), König and Wenzelburger (2021), Vaccaro et al. (2019, 2021) |
| Quality assurance during development | Almada (2019), Elkin-Koren (2020), Hirsch et al. (2017), Kariotis and Mir (2020), Ploug and Holm (2020), Vaccaro et al. (2019, 2020), Walmsley (2021) |
| Quality assurance after deployment | Aler Tubella et al. (2020), Almada (2019), Bayamlıoğlu (2021), Hirsch et al. (2017), Vaccaro et al. (2020, 2021), Walmsley (2021) |
| Risk mitigation strategies | Hirsch et al. (2017), Lyons et al. (2021), Ploug and Holm (2020), Vaccaro et al. (2019, 2020) |
| Third-party oversight | Bayamlıoğlu (2021), Crawford (2016), Edwards and Veale (2018), Elkin-Koren (2020), Lyons et al. (2021), Vaccaro et al. (2019, 2020) |

References

- Aler Tubella, A., Theodorou, A., Dignum, V., et al. (2020). Contestable black boxes. In V. Gutiérrez-Basulto, T. Kliegr, A. Soylu, et al. (Eds.), Rules and reasoning (Vol. 12173). Springer.

- Almada, M. (2019). Human intervention in automated decision-making: Toward the construction of contestable systems. In Proceedings of the 17th International Conference on Artificial Intelligence and Law, ICAIL 2019, pp 2-11

- Ananny, M., & Crawford, K. (2018). Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. New Media and Society, 20(3), 973-989.

- Applebee, A. N., & Langer, J. A. (1983). Instructional scaffolding: Reading and writing as natural language activities. Language Arts, 60(2), 168-175. http://www.jstor.org/stable/41961447.

- Bayamlıoğlu, E. (2021). The right to contest automated decisions under the General Data Protection Regulation: Beyond the so-called "right to explanation". Regulation and Governance.

- Binns, R., & Gallo, V. (2019). An overview of the Auditing Framework for Artificial Intelligence and its core components. https://ico.org.uk/about-the-ico/news-and-events/ai-blog-an-overview-of-the-auditing-framework-for-artificial-intelligence-and-its-core-components/

- Braun, M., Bleher, H., & Hummel, P. (2021). A leap of faith: Is there a formula for "trustworthy" AI? Hastings Center Report, 51(3), 17-22. https://doi.org/10.1002/hast.1207.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101.

- Brkan, M. (2019). Do algorithms rule the world? Algorithmic decision-making and data protection in the framework of the GDPR and beyond. International Journal of Law and Information Technology, 27(2), 91-121.

- Cavalcante Siebert, L., Lupetti, M. L., & Aizenberg, E., et al. (2022). Meaningful human control: Actionable properties for AI system development. AI and Ethics.

- Chiusi, F., Fischer, S., & Kayser-Bril, N., et al. (2020). Automating Society Report 2020. Tech. rep., Algorithm Watch, https://automatingsociety.algorithmwatch.org

- Cobbe, J., Lee, M. S. A., & Singh, J. (2021). Reviewable Automated Decision-Making: A Framework for Accountable Algorithmic Systems. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. Association for Computing Machinery, Virtual Event, pp 598-609, https://doi.org/10.1145/3442188.3445921

- Cowgill, B., & Tucker, C. (2017). Algorithmic bias: A counterfactual perspective. Working Paper: NSF Trustworthy Algorithms, p 3. http://trustworthy-algorithms.org/whitepapers/Bo Cowgill.pdf

- Crawford, K. (2016). Can an algorithm be agonistic? Ten scenes from life in calculated publics. Science, Technology, & Human Values, 41(1), 77-92

- Crawford, K., Dobbe, R., & Dryer, T., et al. (2019). AI now 2019 report. Technical report, AI Now Institute. https://ainowinstitute.org/AI_Now_2019_Report.html

- Davis, J. (2009). Design methods for ethical persuasive computing. In Proceedings of the 4th international conference on persuasive technology. Association for Computing Machinery, Persuasive '09.

- de Sio, F. S., & van den Hoven, J. (2018). Meaningful human control over autonomous systems: A philosophical account. Frontiers Robotics AI, 5, 1-14.

- Dorst, K., & Cross, N. (2001). Creativity in the design process: Co-evolution of problem-solution. Design Studies, 22(5), 425-437.

- Dourish, P. (2004). What we talk about when we talk about context. Personal and Ubiquitous Computing, 8(1), 19-30.

- Edwards, L., & Veale, M. (2018). Enslaving the algorithm: From a "right to an explanation" to a "right to better decisions"? IEEE Security & Privacy, 16(3), 46-54.

- Elkin-Koren, N. (2020). Contesting algorithms: Restoring the public interest in content filtering by artificial intelligence. Big Data & Society, 7(2), 205395172093,229.

- Franssen, M. (2015). Design for values and operator roles in sociotechnical systems. In: van den Hoven J, Vermaas PE, van de Poel I (Eds.) Handbook of Ethics, Values, and Technological Design. Springer, pp 117-149, https://doi.org/10.1007/978-94-007-6970-0_8

- Gebru, T., Morgenstern, J., & Vecchione, B., et al. (2020). Datasheets for datasets. arXiv:1803.09010 [cs]

- Geuens, J., Geurts, L., Swinnen, T. W., et al. (2018). Turning tables: A structured focus group method to remediate unequal power during participatory design in health care. In Proceedings of the 15th participatory design conference: Short papers, situated actions, workshops and tutorial - Volume 2. ACM, Hasselt and Genk, pp 1-5.

- Goodman, B. (2016). Economic models of (algorithmic) discrimination. In 29th conference on neural information processing systems

- Henin, C., & Le Métayer, D. (2021). Beyond explainability: Justifiability and contestability of algorithmic decision systems. AI & Society

- Hildebrandt, M. (2017). Privacy as protection of the incomputable self: Agonistic machine learning. SSRN Electronic Journal 1-33.

- Hirsch, T., Merced, K., Narayanan, S., et al. (2017). Designing contestability: Interaction design, machine learning, and mental health. In DIS 2017 - Proceedings of the 2017 ACM conference on designing interactive systems. ACM Press, pp 95-99.

- Höök, K., Karlgren, J., & Waern, A., et al. (1998). A glass box approach to adaptive hypermedia. In: Brusilovsky P, Kobsa A, Vassileva J (Eds.) Adaptive hypertext and hypermedia. Springer, pp 143-170, https://doi.org/10.1007/978-94-017-0617-9_6

- Höök, K., & Löwgren, J. (2012). Strong concepts: Intermediate-level knowledge in interaction design research. ACM Transactions on Computer-Human Interaction, 19(3), 1-18.

- Hutchinson, B., Smart, A., & Hanna, A., et al. (2021). Towards accountability for machine learning datasets: Practices from software engineering and infrastructure. In Proceedings of the 2021 ACM conference on fairness, accountability, and transparency. ACM, Virtual Event Canada, pp 560-575.

- Jewell, M. (2018). Contesting the decision: Living in (and living with) the smart city. International Review of Law, Computers and Technology.

- Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389-399.

- Johnson, D. W. (2003). Social interdependence: Interrelationships among theory, research, and practice. American Psychologist, 58(11), 934-945.

- Kamarinou, D., Millard, C., & Singh, J. (2016). Machine learning with personal data. Queen Mary School of Law Legal Studies Research Paper, 1(247), 23.

- Kariotis, T., & Mir, D. J. (2020). Fighting back algocracy: The need for new participatory approaches to technology assessment. In Proceedings of the 16th Participatory Design Conference 2020 - Participation(s) Otherwise - Volume 2. ACM, Manizales Colombia, pp 148-153.

- Katell, M., Young, M., & Dailey, D., et al. (2020). Toward situated interventions for algorithmic equity: Lessons from the field. In Proceedings of the 2020 conference on fairness, accountability, and transparency. Association for Computing Machinery, pp 45-55, https://doi.org/10.1145/3351095.3372874

- Kluttz, D., Kohli, N., & Mulligan, D. K. (2018). Contestability and professionals: From explanations to engagement with algorithmic systems. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.33118

- Kluttz, D. N., & Mulligan, D. K. (2019). Automated decision support technologies and the legal profession. Berkeley Technology Law Journal, 34(3), 853. https://doi.org/10.15779/Z38154DP7K.

- Kluttz, D. N., Mulligan, D. K., et al. (2019). Shaping our tools: Contestability as a means to promote responsible algorithmic decision making in the professions. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3311894.

- König, P. D., & Wenzelburger, G. (2021). The legitimacy gap of algorithmic decision-making in the public sector: Why it arises and how to address it. Technology in Society, 67, 101688.

- Kroes, P., Franssen, M., van de Poel, I., et al. (2006). Treating socio-technical systems as engineering systems: Some conceptual problems. Systems Research and Behavioral Science, 23(6), 803-814.

- Kroll, J. A., Barocas, S., Felten, E. W., et al. (2016). Accountable algorithms. U Pa L Rev, 165, 633.

- Leahu, L. (2016). Ontological surprises: A relational perspective on machine learning. In Proceedings of the 2016 ACM conference on designing interactive systems. ACM, pp 182-186

- Leydens, J. A., & Lucena, J. C. (2018). Engineering justice: Transforming engineering education and practice. IEEE PCS Professional Engineering Communication Series. Wiley.

- Löwgren, J., Gaver, B., & Bowers, J. (2013). Annotated Portfolios and other forms of intermediate-level knowledge. Interactions pp 30-34.

- Lyons, H., Velloso, E., & Miller, T. (2021). Conceptualising contestability: Perspectives on contesting algorithmic decisions. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW1), 1-25.

- Mahendran, A., & Vedaldi, A. (2015). Understanding deep image representations by inverting them. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR)

- Matias, J. N., Johnson, A., Boesel, W. E., et al. (2015). Reporting, reviewing, and responding to harassment on twitter. https://doi.org/10.48550/ARXIV.1505.03359

- Mendoza, I., & Bygrave, L. A. (2017). The right not to be subject to automated decisions based on profiling. In EU Internet Law. Springer, pp 77-98

- Methnani, L., Aler Tubella, A., & Dignum, V., et al. (2021). Let me take over: Variable autonomy for meaningful human control. Frontiers in Artificial Intelligence 4.

- Mitchell, M., Wu, S., Zaldivar, A., et al. (2019). Model cards for model reporting. In Proceedings of the conference on fairness, accountability, and transparency. ACM, pp 220-229

- Moher, D., Liberati, A., Tetzlaff, J., et al. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), e1000097.

- Mohseni, S. (2019). Toward design and evaluation framework for interpretable machine learning systems. In Proceedings of the 2019 AAAI/ACM conference on AI, ethics, and society. ACM, pp. 553-554.

- Morley, J., Floridi, L., Kinsey, L., et al. (2019). From what to how. An overview of AI ethics tools, methods and research to translate principles into practices. Science and Engineering Ethics, 26, 2141-2168.

- Myers West, S. (2018). Censored, suspended, shadowbanned: User interpretations of content moderation on social media platforms. New Media & Society, 20(11), 4366-4383.

- Nissenbaum, H. (2011). A contextual approach to privacy online. Daedalus, 140(4), 32-48.

- Norman, D. A., & Stappers, P. J. (2015). DesignX: Complex sociotechnical systems. She Ji: The Journal of Design, Economics, and Innovation, 1(2), 83-106.

- Novick, D. G., & Sutton, S. (1997). What is mixed-initiative interaction. In Proceedings of the AAAI spring symposium on computational models for mixed initiative interaction, p 12.

- Obrenović, Ž. (2011). Design-based research: What we learn when we engage in design of interactive systems. Interactions, 18(5), 56-59.

- Ouzzani, M., Hammady, H., Fedorowicz, Z., et al. (2016). Rayyan: A web and mobile app for systematic reviews. Systematic Reviews, 5(1), 210.

- Ploug, T., & Holm, S. (2020). The four dimensions of contestable AI diagnostics: A patient-centric approach to explainable AI. Artificial Intelligence in Medicine, 107, 101901.

- Raji, I. D., Smart, A., White, R. N., et al. (2020). Closing the AI accountability gap: Defining an end-to-end framework for internal algorithmic auditing. FAT* 2020 - Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp 33-44

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). "Why should i trust you?": Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. ACM, pp 1135-1144.

- Rouvroy, A. (2012). The end(s) of critique: Data-behaviourism vs. due-process. In: Hildebrandt M, De Vries E (Eds.) Privacy, due process and the computational turn. Philosophers of Law Meet Philosophers of Technology.

- Salehi, N., Teevan, J., Iqbal, S., et al. (2017). Communicating context to the crowd for complex writing tasks. In: Proceedings of the 2017 ACM conference on computer supported cooperative work and social computing. ACM, Portland, pp 1890-1901.

- Sandvig, C., Hamilton, K., Karahalios, K., et al. (2014). Auditing algorithms: Research methods for detecting discrimination on internet platforms. In: Data and discrimination: Converting critical concerns into productive inquiry.

- Sarra, C. (2020). Put dialectics into the machine: Protection against automatic-decision-making through a deeper understanding of contestability by design. Global Jurist, 20(3), 20200003.

- Schot, J., & Rip, A. (1997). The past and future of constructive technology assessment. Technological Forecasting and Social Change, 54(2-3), 251-268.

- Selbst, A. D., & Barocas, S. (2018). The intuitive appeal of explainable machines. SSRN Electronic Journal.

- Shneiderman, B. (2020). Bridging the gap between ethics and practice: Guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Transactions on Interactive Intelligent Systems, 10(4), 1-31.

- Sloane, M., Moss, E., Awomolo, O., et al. (2020). Participation is not a design fix for machine learning. arXiv:2007.02423 [cs]

- Stolterman, E., & Wiberg, M. (2010). Concept-driven interaction design research. Human-Computer Interaction, 25(2), 95-118.

- Suchman, L. (2018). Corporate accountability. https://robotfutures.wordpress.com/2018/06/10/corporate-accountability/

- Tickle, A., Andrews, R., Golea, M., et al. (1998). The truth will come to light: Directions and challenges in extracting the knowledge embedded within trained artificial neural networks. IEEE Transactions on Neural Networks, 9(6), 1057-1068.

- Tonkinwise, C. (2016). The interaction design public intellectual. Interactions, 23(3), 24-25.

- Umbrello, S. (2021). Coupling levels of abstraction in understanding meaningful human control of autonomous weapons: A two-tiered approach. Ethics and Information Technology, 23(3), 455-464.

- Vaccaro, K., Karahalios, K., Mulligan, D. K., et al. (2019). Contestability in algorithmic systems. In Conference companion publication of the 2019 on computer supported cooperative work and social computing. ACM, pp 523-527

- Vaccaro, K., Sandvig, C., & Karahalios, K. (2020). At the end of the day Facebook does what it wants: How users experience contesting algorithmic content moderation. In Proceedings of the ACM on human-computer interaction 4.

- Vaccaro, K., Xiao, Z., Hamilton, K., et al. (2021). Contestability for content moderation. In: Proceedings of the ACM on human-computer interaction, pp 1-28.

- van de Poel, I. (2020). Embedding values in artificial intelligence (AI) systems. Minds and Machines.

- Verbeek, P. P. (2015). Beyond interaction: A short introduction to mediation theory. Interactions, 22(3), 26-31.

- Verdiesen, I., Santoni de Sio, F., & Dignum, V. (2021). Accountability and control over autonomous weapon systems: A framework for comprehensive human oversight. Minds and Machines, 31(1), 137-163.

- Walmsley, J. (2021). Artificial intelligence and the value of transparency. AI & SOCIETY, 36(2), 585-595.

- Winner, L. (1980). Do artifacts have politics? Daedalus, 109(1), 121-136.