Paradigms for Parallel Computation

2008 DoD HPCMP Users Group Conference

https://doi.org/10.1109/DOD.HPCMP.UGC.2008.18

Abstract

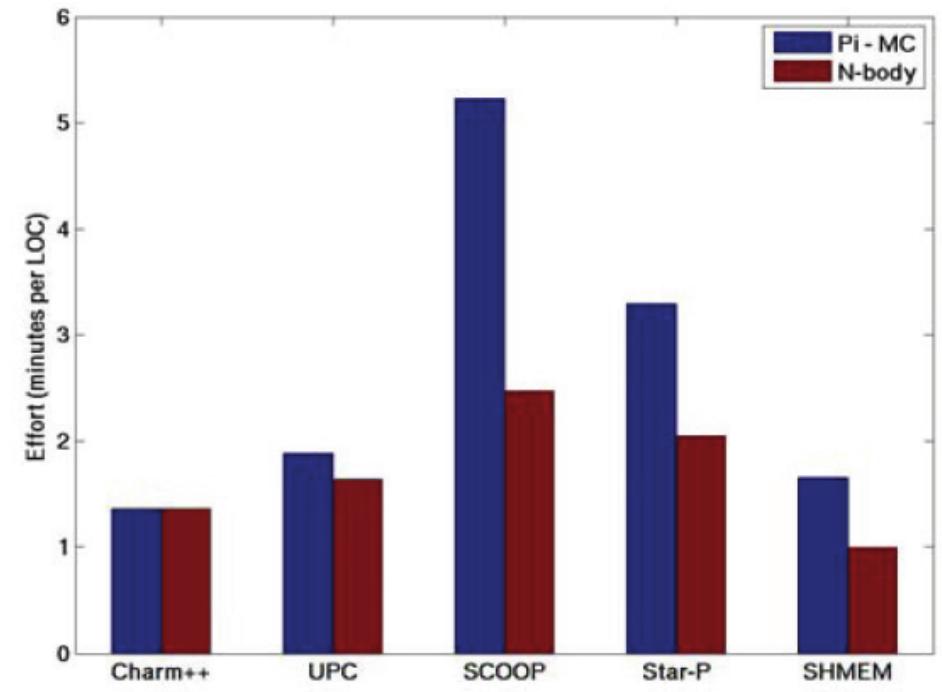

Message passing, as implemented in the Message Passing Interface (MPI), has become the industry standard for programming distributed-memory parallel architectures, while threading on shared-memory machines is typically implemented with OpenMP. Outstanding performance has been achieved with these methods, but only on a relatively small number of codes, and only with expert tuning, particularly as system size increases. With the advent of multicore/manycore microprocessors and the continued growth in system size, an inflection point may be nearing that will require a substantial shift in the way large-scale systems are programmed if productivity is to be maintained. The parallel paradigms project was undertaken to evaluate the near-term readiness of a number of emerging ideas in parallel programming, with specific emphasis on their applicability to applications in the User Productivity Enhancement and Technology Transfer (PET) Electronics, Networking, and Systems/C4I (ENS) focus area. The evaluation examined usability, performance, scalability, and support for fault tolerance. Applications representative of ENS problems were ported to each of the evaluated languages, along with a set of "dwarf" codes representing a broader workload. In addition, a user study was conducted in which teaching modules were developed for each paradigm and delivered to groups of both novice and expert programmers to measure productivity. Results and experiences will be presented for six paradigms currently under evaluation, including the performance of applications re-coded across these models and feedback from users.
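To make the contrast between the two established models concrete, the sketch below computes the same parallel sum in each style. It is an illustration under stated assumptions, not code from the study: it uses neither MPI nor OpenMP, but Python's standard `threading` and `queue` modules to mimic each model, and the function names (`partial_sum`, `message_passing_sum`, `shared_memory_sum`) are hypothetical. Because of CPython's global interpreter lock, it shows program structure only, not parallel speedup.

```python
import queue
import threading


def partial_sum(data, rank, nworkers):
    # Each worker owns a strided slice of the input: rank, rank+nworkers, ...
    return sum(data[rank::nworkers])


def message_passing_sum(data, nworkers=4):
    """Message-passing style (loosely analogous to MPI send/recv feeding a
    reduction on one rank): workers keep results private and communicate
    only by sending explicit messages to a collector."""
    mailbox = queue.Queue()

    def worker(rank):
        # No shared result storage is touched; the only communication is
        # the (rank, value) message placed on the queue.
        mailbox.put((rank, partial_sum(data, rank, nworkers)))

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(nworkers)]
    for t in threads:
        t.start()
    # The collector blocks until it has received one message per worker.
    total = sum(mailbox.get()[1] for _ in range(nworkers))
    for t in threads:
        t.join()
    return total


def shared_memory_sum(data, nworkers=4):
    """Shared-memory style (loosely analogous to an OpenMP parallel loop
    with a reduction): all threads write into one shared array, each into
    its own slot, and the main thread combines the slots afterwards."""
    partials = [0] * nworkers  # visible to every thread

    def worker(rank):
        partials[rank] = partial_sum(data, rank, nworkers)

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(nworkers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # acts as the barrier before the final reduction
    return sum(partials)


if __name__ == "__main__":
    values = list(range(1, 1001))
    print(message_passing_sum(values), shared_memory_sum(values))
```

Run as a script, it prints `500500 500500`. The message-passing version never touches shared result storage, which is what allows the MPI model to span separate address spaces; the shared-memory version depends on every thread seeing the same `partials` list, which holds only within a single node.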

Dan Stanzione