Advanced Technologies for Cloud Storage

https://doi.org/10.13140/RG.2.1.1335.2562…

29 pages

Abstract

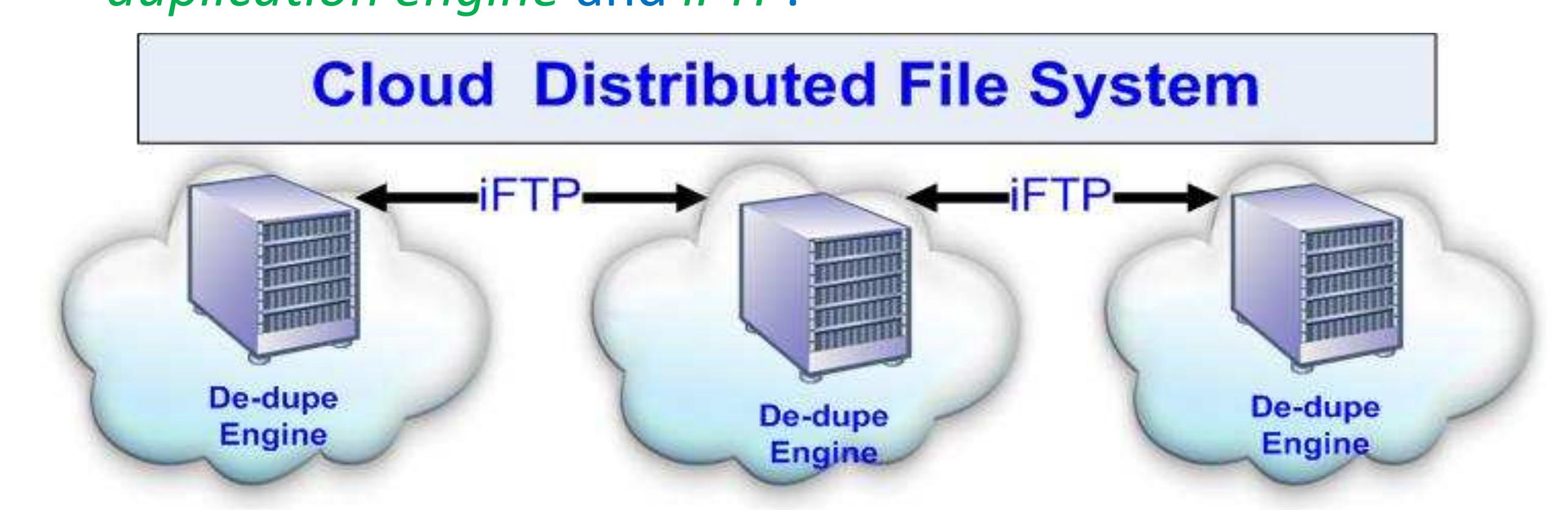

This paper discusses three technologies for big data in cloud storage: (a) data de-duplication, to reduce cloud storage cost; (b) file sync protocols, to reduce traffic between clouds and between users and clouds; and (c) high-speed file transport protocols.

Key takeaways

- Data de-duplication significantly reduces cloud storage costs and improves efficiency.

- File synchronization protocols reduce traffic between clouds and between users and clouds.

- High-speed file transport protocols leverage UDP to address TCP limitations in large file transfers.

- The text aims to discuss advanced technologies addressing challenges in big data cloud storage.

- Key challenges include data security, availability, performance, and regulatory compliance.
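
As a rough illustration of the de-duplication takeaway, the sketch below stores each distinct chunk of data only once and records files as lists of chunk digests. It is a minimal example under stated assumptions, not the method described in the paper: the `DedupStore` class, its method names, the fixed chunk size, and the choice of SHA-256 are all hypothetical and chosen for illustration.

```python
import hashlib


class DedupStore:
    """Toy content-addressed store (hypothetical): identical chunks are kept once."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size  # fixed-size chunking is an assumption
        self.chunks = {}              # digest -> chunk bytes
        self.files = {}               # file name -> ordered list of digests

    def put(self, name, data):
        """Split data into chunks and store only chunks not seen before."""
        digests = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # no-op if already stored
            digests.append(digest)
        self.files[name] = digests

    def get(self, name):
        """Rebuild a file by concatenating its chunks in order."""
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self):
        """Bytes actually held after de-duplication."""
        return sum(len(c) for c in self.chunks.values())
```

With a 4-byte chunk size, storing `b"abcdabcdabcd"` and then `b"abcdxyz"` keeps only two distinct chunks (`abcd` and `xyz`), even though the two files total 19 bytes of logical data. Production systems often use variable-size, content-defined chunking instead, so that an insertion near the start of a file does not shift every later chunk boundary.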

Liwei Ren